1. Introduction to TSUBAME4.0

1.1. System architecture

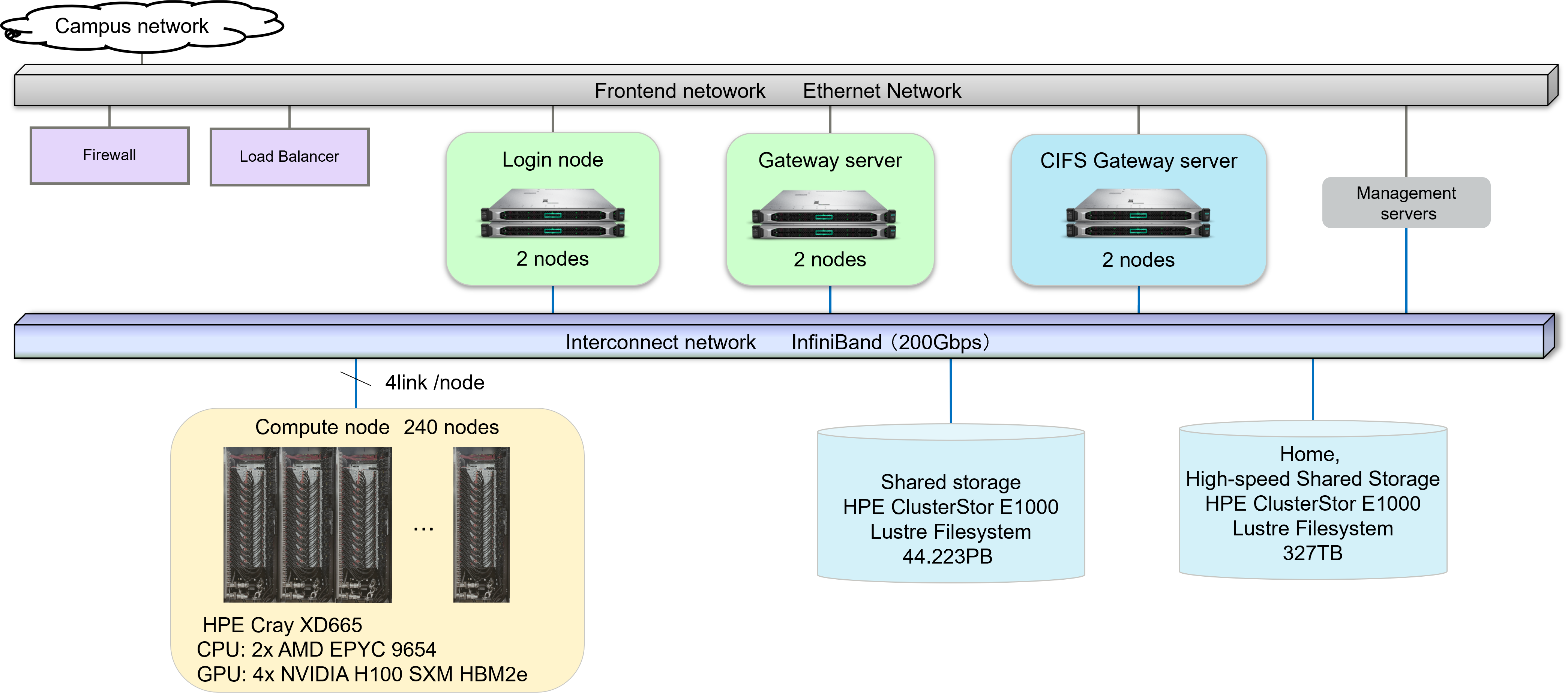

This system is a shared computer that can be used by the various research and development departments at Tokyo Institute of Technology.

The system's theoretical peak double-precision performance is 66.8PFLOPS and its theoretical peak half-precision performance is 952PFLOPS. Total main memory is 180TiB, total SSD capacity is 327TB, and total HDD capacity is 44.2PB.

The compute nodes and storage systems are connected to a high-speed InfiniBand network, and the system is connected to the internet at 100Gbps via SINET6 directly from the Suzukakedai campus of Tokyo Institute of Technology.

The system architecture of TSUBAME4.0 is shown in the figure at the beginning of this section.

1.2. Compute node configuration

The compute nodes of this system form a large-scale cluster consisting of 240 HPE Cray XD665 nodes.

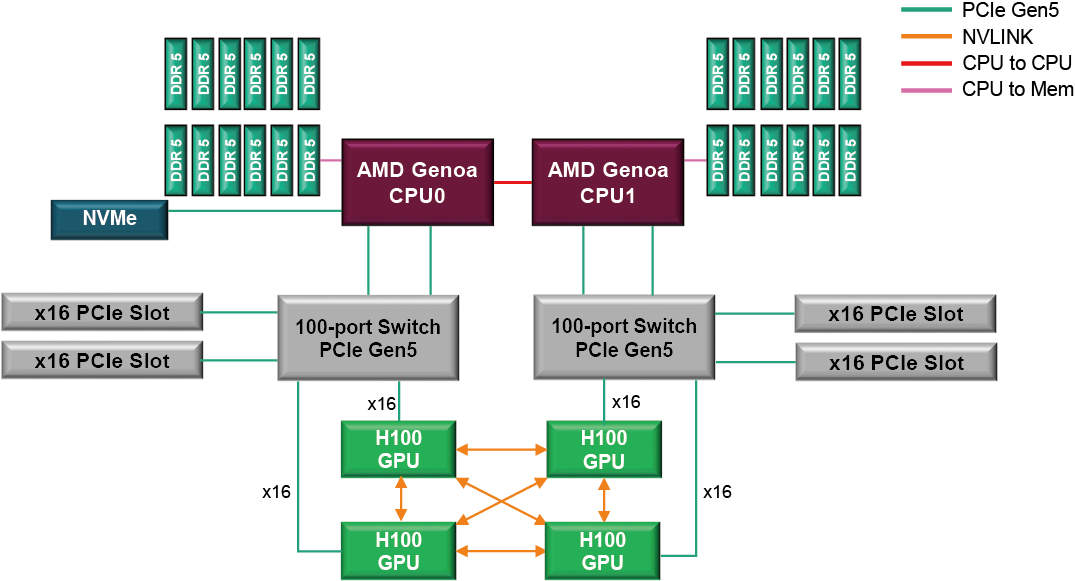

Each compute node is equipped with two AMD EPYC 9654 processors (96 cores, 2.4GHz), for a total of 46,080 cores (240 nodes x 2 sockets x 96 cores).

The main memory capacity is 768GiB per compute node, for a total memory capacity of 180TiB.

Each compute node has four InfiniBand NDR200 ports and is connected through InfiniBand switches in a fat-tree topology.

The specifications of each TSUBAME4.0 node are as follows:

| Components | Model/Specification |
|---|---|
| CPU | AMD EPYC 9654 2.4GHz x 2 Socket |
| Core/Thread | 96 core / 192 thread x 2 Socket |
| Memory | 768GiB (DDR5-4800) |
| GPU | NVIDIA H100 SXM5 94GB HBM2e x 4 |
| SSD | 1.92TB NVMe U.2 SSD |
| Interconnect | InfiniBand NDR200 200Gbps x 4 |
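
Aggregate figures can be derived from the per-node specifications. The following is a minimal Python sketch of that arithmetic, using only the node count (240) and the per-node values from the table above. The constant and variable names are illustrative and are not part of any TSUBAME tooling.

```python
# Per-node values taken from the specification table; names are illustrative.
NODES = 240
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 96
MEMORY_GIB_PER_NODE = 768
GPUS_PER_NODE = 4
IB_PORTS_PER_NODE = 4
IB_GBPS_PER_PORT = 200

total_cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
total_memory_tib = NODES * MEMORY_GIB_PER_NODE / 1024  # 1 TiB = 1024 GiB
total_gpus = NODES * GPUS_PER_NODE
ib_gbps_per_node = IB_PORTS_PER_NODE * IB_GBPS_PER_PORT

print(f"CPU cores:        {total_cores:,}")              # 46,080
print(f"Main memory:      {total_memory_tib:.0f} TiB")   # 180 TiB
print(f"GPUs:             {total_gpus}")                 # 960
print(f"InfiniBand/node:  {ib_gbps_per_node} Gbps")      # 800 Gbps
```

The per-node InfiniBand figure is simply the sum of the four NDR200 ports; achievable bandwidth depends on the application and the network topology.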

1.3. Software configuration

The operating system (OS) of this system is the following:

- Red Hat Enterprise Linux Server 9.3

The OS configuration is dynamically changed according to the service execution configuration.
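
Because the OS configuration can change, a job may want to record which OS release it actually ran on. The sketch below assumes the standard `/etc/os-release` file found on Red Hat Enterprise Linux and most other Linux distributions; `parse_os_release` is a hypothetical helper written for this example, not a TSUBAME-provided command.

```python
import os

def parse_os_release(text):
    """Parse KEY=value lines in os-release format into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip("\"'")  # drop surrounding quotes
    return info

if __name__ == "__main__":
    path = "/etc/os-release"  # assumed location; standard on systemd-based distributions
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            info = parse_os_release(f.read())
        print(info.get("NAME", "unknown"), info.get("VERSION_ID", "unknown"))
    else:
        print(f"{path} not found")
```

Logging this at the start of a job makes it easier to reproduce results if the environment differs between runs.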

For the application software available on this system, please refer to Commercial applications and Freeware.

1.4. Storage configuration

This system has high-speed, large-capacity storage for storing various simulation results.

On the compute nodes, the Lustre file system is used for the high-speed storage area, the large-scale storage area, home directories, and common application deployments.

In addition, a 1.92TB NVMe SSD is installed in each compute node as a local scratch area.

The file systems available in this system are listed below:

| Usage | Mount point | Capacity | Filesystem |
|---|---|---|---|
| High-speed storage area, Home directory (SSD) | /gs/fs, /home | 372TB | Lustre |
| Large-scale storage area, Common application deployment (HDD) | /gs/bs, /apps | 44.2PB | Lustre |
| Local scratch area (SSD) | /local | 1.92TB per node | xfs |

For more details about the local scratch area in each resource type, please refer to Available resource type.
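
As a rough illustration of how the mount points above might be inspected from a compute node, the following Python sketch reports total and free space using the standard library's `shutil.disk_usage`. The mount point list is taken from the table; `usage_report` is a hypothetical helper. The numbers are whatever the operating system reports for each file system, so on the shared Lustre areas they are not expected to reflect individual or group quotas.

```python
import os
import shutil

# Mount points listed in the file system table.
MOUNT_POINTS = ["/gs/fs", "/home", "/gs/bs", "/apps", "/local"]

def usage_report(paths):
    """Return {path: (total, used, free)} in bytes for the paths that exist."""
    report = {}
    for path in paths:
        if not os.path.isdir(path):
            continue  # not present on this machine, skip it
        usage = shutil.disk_usage(path)
        report[path] = (usage.total, usage.used, usage.free)
    return report

if __name__ == "__main__":
    for path, (total, used, free) in usage_report(MOUNT_POINTS).items():
        # Decimal terabytes, matching the TB units used in the table.
        print(f"{path}: {free / 1e12:.2f} TB free of {total / 1e12:.2f} TB")
```

Paths that are not mounted are skipped, so the same script can be run on a login node or a workstation without error.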